Misinformation has a real impact on the world.

Be WISER and help slow the spread of misinformation.

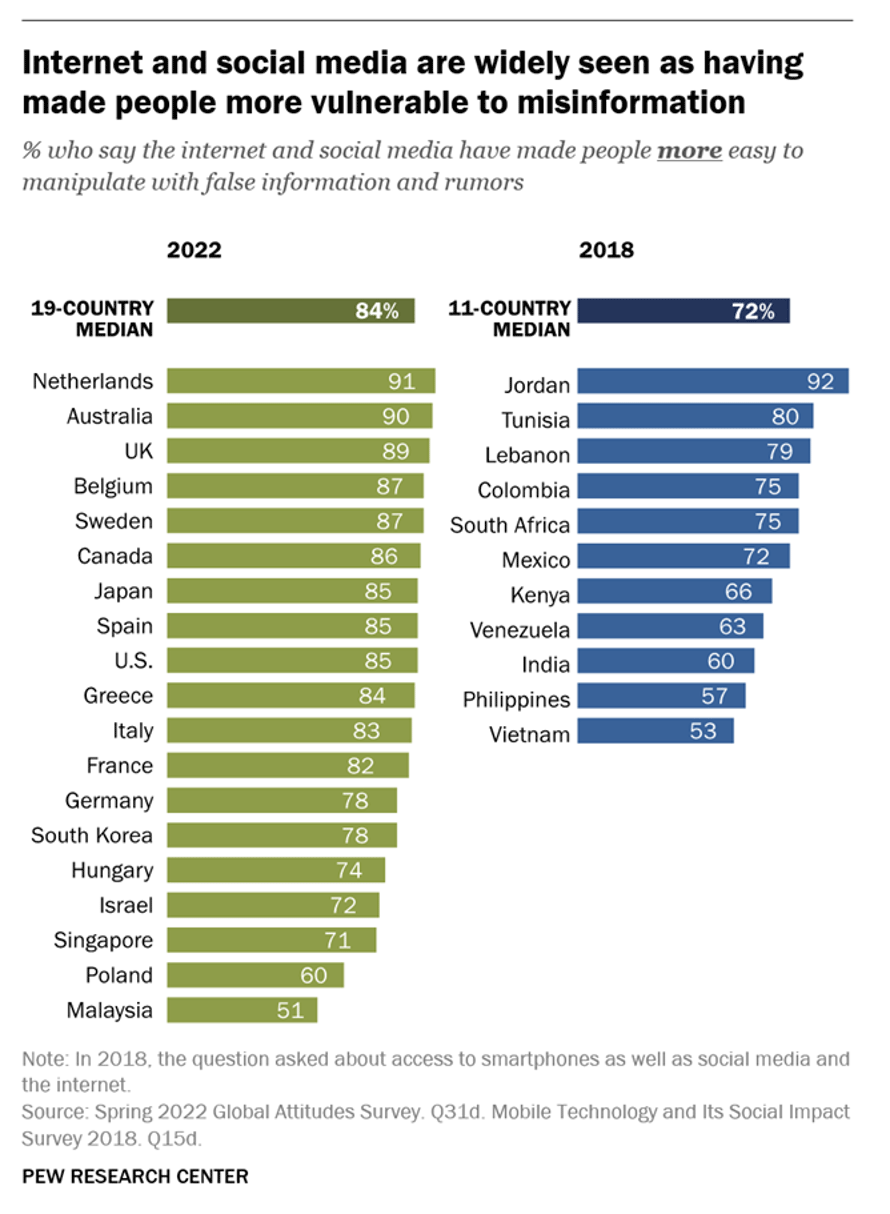

From climate change denial to inaccurate plastics recycling advice, misinformation and disinformation campaigns have had real effects on the environment, and fake news is only spreading faster and further with technology platforms like social media. That’s why the SEED Institute is disseminating a research-informed solution to combat the spread of misinformation on social media.

- Nearly 75% of Americans have been exposed to fake news (Statista, 2024).
- 38% of people admit to having accidentally shared false information online (Statista, 2024).
- Sites that publish misinformation net over $2 billion in global advertising revenue (Statista, 2024).
- Levels of perceived misinformation around climate change are about three times higher in the US (35%) than they are in Japan (12%; Neuman, 2023).

While many people think they do a good job spotting what’s true and what’s false, that’s a really tough task. And it’s not just your cranky uncle or your sweet abuelita who has trouble! Research shows that younger people are also susceptible to being fooled by misinformation and accidentally sharing it.[1]

But so what? People get a few memes that might not tell the truth? Actually, misinformation has real consequences, from disrupting the fight against climate change,[2] to spreading dubious financial advice that could hurt your wallet,[3] to discouraging people from getting vaccinated,[4] to undermining the foundations of democracy.[5]

Aren’t you tired of being manipulated?

How can you protect yourself and others? Be WISER.

Click each letter below to learn ways you could put the WISER framework into practice. What other ways can you think of?

-Vosoughi, Roy, and Aral (2018), Science

Other Resources

Here are some other resources on misinformation you might want to check out:

- Test Yourself: Which Faces Were Made by A.I.?

This game from The New York Times challenges you to decide whether a photo is real or AI-generated.

- University of Cambridge Misinformation Susceptibility Test

Can you spot misinformation when you see it? Evaluate headlines to decide whether they are true or false. If you are under 18, select ‘No, I don’t consent’ on the first screen when you are asked about participating in a study (you can still take the quiz without participating in the study). The test is available in a 20-question version (MIST-20) and a 16-question version (MIST-16).

- Case Studies

Central Washington University presents a number of case studies about detecting false news online. Would you have caught these clues?

- MediaWise Teen Fact-Checking Network

Teens discuss tips for spotting and managing misinformation online. Created by the Poynter Institute in collaboration with PBS NewsHour Student Reporting Labs.

Selected Research

- Title: “Misinformation Interventions are Common, Divisive, and Poorly Understood.”

Citation: Saltz, E., Barari, S., Leibowicz, C., & Wardle, C. (2021). Misinformation interventions are common, divisive, and poorly understood. Harvard Kennedy School (HKS) Misinformation Review, 2(5).

Abstract: Social media platforms label, remove, or otherwise intervene on thousands of posts containing misleading or inaccurate information every day. Who encounters these interventions, and how do they react? A demographically representative survey of 1,207 Americans reveals that 49% have been exposed to some form of online misinformation intervention. However, most are not well-informed about what kinds of systems, both algorithmic and human, are applying these interventions: 40% believe that content is mostly or all checked, and 17.5% are not sure, with errors attributed to biased judgment more than any other cause, across political parties. Although support for interventions differs considerably by political party, other distinct traits predict support, including trust in institutions, frequent social media usage, and exposure to “appropriate” interventions.

- Title: “Fighting Biased News Diets: Using News Media Literacy Interventions to Stimulate Online Cross-Cutting Media Exposure Patterns.”

Citation: van der Meer, T. G., & Hameleers, M. (2021). Fighting biased news diets: Using news media literacy interventions to stimulate online cross-cutting media exposure patterns. New Media & Society, 23(11), 3156-3178.

Abstract: Online news consumers have the tendency to select political news that confirms their prior attitudes, which may further fuel polarized divides in society. Despite scholarly attention to drivers of selective exposure, we know too little about how healthier cross-cutting news exposure patterns can be stimulated in digital media environments. Study 1 (N = 553) exposed people to news media literacy (NML) interventions using injunctive and descriptive normative language. The findings reveal the conditional effect of such online interventions: Participants with pro-immigration attitudes engaged in more cross-cutting exposure while the intervention was only to a certain extent effective for Democrats, ineffective for Republicans, and even boomeranged for partisans with anti-immigration attitudes. In response to these findings, Study 2 (N = 579) aimed to design interventions that work across issue publics and party affiliation. We show that NML messages tailored on immigration beliefs can be effective across the board. These findings inform the design of more successful NML interventions.

- Title: “Fighting COVID-19 Misinformation on Social Media: Experimental Evidence for a Scalable Accuracy-Nudge Intervention.”

Citation: Pennycook, G., McPhetres, J., Zhang, Y., Lu, J. G., & Rand, D. G. (2020). Fighting COVID-19 misinformation on social media: Experimental evidence for a scalable accuracy-nudge intervention. Psychological Science, 31(7), 770-780.

Abstract: Across two studies with more than 1,700 U.S. adults recruited online, we present evidence that people share false claims about COVID-19 partly because they simply fail to think sufficiently about whether or not the content is accurate when deciding what to share. In Study 1, participants were far worse at discerning between true and false content when deciding what they would share on social media relative to when they were asked directly about accuracy. Furthermore, greater cognitive reflection and science knowledge were associated with stronger discernment. In Study 2, we found that a simple accuracy reminder at the beginning of the study (i.e., judging the accuracy of a non-COVID-19-related headline) nearly tripled the level of truth discernment in participants’ subsequent sharing intentions. Our results, which mirror those found previously for political fake news, suggest that nudging people to think about accuracy is a simple way to improve choices about what to share on social media.

- Title: “Effects of Credibility Indicators on Social Media News Sharing Intent.”

Citation: Yaqub, W., Kakhidze, O., Brockman, M. L., Memon, N., & Patil, S. (2020, April). Effects of credibility indicators on social media news sharing intent. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems (pp. 1-14).

Abstract: In recent years, social media services have been leveraged to spread fake news stories. Helping people spot fake stories by marking them with credibility indicators could dissuade them from sharing such stories, thus reducing their amplification. We carried out an online study (N = 1,512) to explore the impact of four types of credibility indicators on people's intent to share news headlines with their friends on social media. We confirmed that credibility indicators can indeed decrease the propensity to share fake news. However, the impact of the indicators varied, with fact checking services being the most effective. We further found notable differences in responses to the indicators based on demographic and personal characteristics and social media usage frequency. Our findings have important implications for curbing the spread of misinformation via social media platforms.

- Title: “The Elusive Backfire Effect: Mass Attitudes’ Steadfast Factual Adherence.”

Citation: Wood, T., & Porter, E. (2019). The elusive backfire effect: Mass attitudes’ steadfast factual adherence. Political Behavior, 41, 135-163.

Abstract: Can citizens heed factual information, even when such information challenges their partisan and ideological attachments? The “backfire effect,” described by Nyhan and Reifler (Polit Behav 32(2):303–330. https://doi.org/10.1007/s11109-010-9112-2, 2010), says no: rather than simply ignoring factual information, presenting respondents with facts can compound their ignorance. In their study, conservatives presented with factual information about the absence of Weapons of Mass Destruction in Iraq became more convinced that such weapons had been found. The present paper presents results from five experiments in which we enrolled more than 10,100 subjects and tested 52 issues of potential backfire. Across all experiments, we found no corrections capable of triggering backfire, despite testing precisely the kinds of polarized issues where backfire should be expected. Evidence of factual backfire is far more tenuous than prior research suggests. By and large, citizens heed factual information, even when such information challenges their ideological commitments.

- Title: “Fighting Misinformation on Social Media Using Crowdsourced Judgments of News Source Quality.”

Citation: Pennycook, G., & Rand, D. G. (2019). Fighting misinformation on social media using crowdsourced judgments of news source quality. Proceedings of the National Academy of Sciences, 116(7), 2521-2526.

Abstract: Reducing the spread of misinformation, especially on social media, is a major challenge. We investigate one potential approach: having social media platform algorithms preferentially display content from news sources that users rate as trustworthy. To do so, we ask whether crowdsourced trust ratings can effectively differentiate more versus less reliable sources. We ran two preregistered experiments (n = 1,010 from Mechanical Turk and n = 970 from Lucid) where individuals rated familiarity with, and trust in, 60 news sources from three categories: (i) mainstream media outlets, (ii) hyperpartisan websites, and (iii) websites that produce blatantly false content (“fake news”). Despite substantial partisan differences, we find that laypeople across the political spectrum rated mainstream sources as far more trustworthy than either hyperpartisan or fake news sources. Although this difference was larger for Democrats than Republicans—mostly due to distrust of mainstream sources by Republicans—every mainstream source (with one exception) was rated as more trustworthy than every hyperpartisan or fake news source across both studies when equally weighting ratings of Democrats and Republicans. Furthermore, politically balanced layperson ratings were strongly correlated (r = 0.90) with ratings provided by professional fact-checkers. We also found that, particularly among liberals, individuals higher in cognitive reflection were better able to discern between low- and high-quality sources. Finally, we found that excluding ratings from participants who were not familiar with a given news source dramatically reduced the effectiveness of the crowd. Our findings indicate that having algorithms up-rank content from trusted media outlets may be a promising approach for fighting the spread of misinformation on social media.

- Title: “The Implied Truth Effect: Attaching Warnings to a Subset of Fake News Headlines Increases Perceived Accuracy of Headlines Without Warnings.”

Citation: Pennycook, G., Bear, A., Collins, E. T., & Rand, D. G. (2020). The implied truth effect: Attaching warnings to a subset of fake news headlines increases perceived accuracy of headlines without warnings. Management Science, 66(11), 4944-4957.

Abstract: What can be done to combat political misinformation? One prominent intervention involves attaching warnings to headlines of news stories that have been disputed by third-party fact-checkers. Here we demonstrate a hitherto unappreciated potential consequence of such a warning: an implied truth effect, whereby false headlines that fail to get tagged are considered validated and thus are seen as more accurate. With a formal model, we demonstrate that Bayesian belief updating can lead to such an implied truth effect. In Study 1 (n = 5,271 MTurkers), we find that although warnings do lead to a modest reduction in perceived accuracy of false headlines relative to a control condition (particularly for politically concordant headlines), we also observed the hypothesized implied truth effect: the presence of warnings caused untagged headlines to be seen as more accurate than in the control. In Study 2 (n = 1,568 MTurkers), we find the same effects in the context of decisions about which headlines to consider sharing on social media. We also find that attaching verifications to some true headlines—which removes the ambiguity about whether untagged headlines have not been checked or have been verified—eliminates, and in fact slightly reverses, the implied truth effect. Together these results contest theories of motivated reasoning while identifying a potential challenge for the policy of using warning tags to fight misinformation—a challenge that is particularly concerning given that it is much easier to produce misinformation than it is to debunk it.

- Title: “Three Preventative Interventions to Address the Fake News Phenomenon on Social Media.”

Citation: Eccles, D. A., Kurnia, S., Dingler, T., & Geard, N. (2021). Three preventative interventions to address the fake news phenomenon on social media. ACIS 2021 Proceedings, 51.

Abstract: Fake news on social media undermines democracies and civil society. To date the research response has been message centric and reactive. This does not address the problem of fake news contaminating social media populations with disinformation, nor address the fake news producers and disseminators who are predominantly human social media users. Our research proposes three preventative interventions - two that empower social media users and one social media structural change to reduce the spread of fake news. Specifically, we investigate how i) psychological inoculation; ii) digital media literacy and iii) Transaction Cost Economy safeguarding through reputation ranking could elicit greater cognitive elaboration from social media users. Our research provides digital scalable preventative interventions to empower social media users with the aim to reduce the population size exposed to fake news.

[1] Arin, K. P., Mazrekaj, D., & Thum, M. (2023). Ability of detecting and willingness to share fake news. Scientific Reports, 13(1), Article 1.

[2] Fleming, M. (2022, May 16). Rampant climate disinformation online is distorting dangers, delaying climate action. https://medium.com/we-the-peoples/rampant-climate-disinformation-online-is-distorting-dangers-delaying-climate-action-375b5b11cf9b

[3] https://www.vox.com/the-goods/22229551/tiktok-personal-finance-day-trading-tesla-scam

[4] Loomba, S., de Figueiredo, A., Piatek, S. J., de Graaf, K., & Larson, H. J. (2021). Measuring the impact of COVID-19 vaccine misinformation on vaccination intent in the UK and USA. Nature Human Behaviour, 5(3), Article 3. https://doi.org/10.1038/s41562-021-01056-1

[5] National Intelligence Council. (2021). Foreign Threats to the 2020 US Federal Elections. https://www.dni.gov/files/ODNI/documents/assessments/ICA-declass-16MAR21.pdf